About Me

I am a Research Engineer at Hillbot. My research interests include high-fidelity simulation, 3D computer vision, and embodied AI. I develop simulation platforms for robotics systems, focusing on realistic rendering and physics modeling that bridge the gap between the virtual and physical worlds. I hold an M.S. in Computer Science, specializing in Artificial Intelligence, from Stanford University, and a B.S. in Computer Science from UC San Diego, where I was fortunate to be advised by Prof. Hao Su.

Interests

- Simulation

- 3D Computer Vision

- Embodied AI

Education

M.S. Computer Science

Stanford University

B.S. Computer Science

University of California, San Diego

Publications

Given sparse unposed views, we leverage rich priors embedded in multiview diffusion models to predict their poses and reconstruct the 3D shape.

Chao Xu, Ang Li, Linghao Chen, Yulin Liu, Ruoxi Shi, Hao Su, Minghua Liu

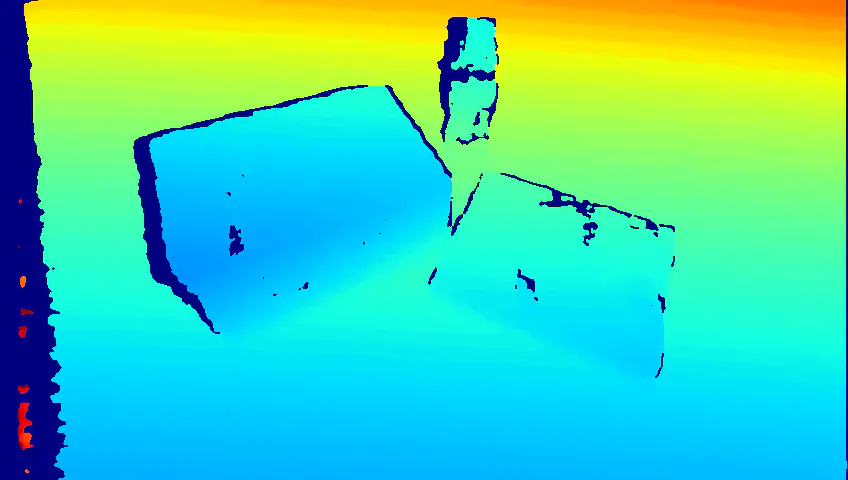

ECCV 2024

SAPIEN Realistic depth narrows the sim-to-real gap between simulated depth and depth from real active stereovision sensors by using a fully physics-grounded pipeline. Perception and RL methods trained in simulation transfer well to the real world without any fine-tuning. The simulated depth can also be used to estimate an algorithm's real-world performance, greatly reducing the human effort required for evaluation.

Xiaoshuai Zhang, Rui Chen, Ang Li, Fanbo Xiang, Yuzhe Qin, Jiayuan Gu, Zhan Ling, Minghua Liu, Peiyu Zeng, Songfang Han, Zhiao Huang, Tongzhou Mu, Jing Xu, Hao Su

T-RO 2023

Selected Projects